Conversion Rate Optimization relies on several qualitative research techniques that offer useful insight into how users react to a product and what feedback they give. One such method is usability testing.

However, as Ayat stated in her article titled 9 Tips to Conducting Accurate Qualitative Research, “qualitative research is only as good as the process and goals behind it.”

Coming up with the who, what, where, and how of a test is anything but simple. This may be why even the most experienced usability researchers often discover a mistake halfway through a test.

Usability testing calls for proper preparation before the test itself: you need to define the scope of the test, set its goals, and design relevant tasks.

Goals are the oxygen tank of any usability test, but it is the tasks that give it direction and support. Understanding the usability goals is therefore the first step towards writing effective tasks for the test.

With that in mind, this article digs deeper into usability tasks and the usability testing procedure, in particular:

- Measuring usability tasks

- Types of usability tasks

- What makes a good usability task?

- Steps involved in designing usability tasks

But before we delve into the heart of the matter, let’s start by defining tasks in the context of a usability test.

Usability tasks: defined

Usability tasks are simply the assignments given to participants during a usability test: an action or activity you want your participants to carry out during the test.

I think of tasks as stepping stones that participants have to take in order to make progress towards accomplishing the goal of the usability test. A usability goal can be defined as the purpose of your test. The most common usability goals are as follows:

- Effectiveness

- Efficiency

- Learnability

- Memorability

- Satisfaction

- Errors

For usability tasks to be understandable to your users, they have to be accompanied by scenarios: short stories that provide the context and description users need to interact with your product or service and complete the given tasks. Simply put, scenarios help users understand the purpose of the tasks they have to perform.

Kim Goodwin gives an extremely good description of scenarios in user testing:

Scenarios are the engine we use to drive our designs. A scenario tells us WHY our users need our design, WHAT the users need the design to do, and HOW they need our design to do it.

Example:

Scenario: You are taking an international flight to London next Wednesday to visit a friend, and you plan to return on Sunday evening. You have to pay for the flight with a credit card.

From the above scenario, the goal of the researcher may be to find out the severity of the errors that users make when trying to book a flight using a credit card as a means of payment.

Drawing from the same scenario, the researcher may ask users to perform these tasks:

- Step 1. Sign up/login on the website

- Step 2. Enter the destination

- Step 3. Enter the date range

- Step 4. Make a booking

- Step 5. Review your booking

- Step 6. Make a payment

As you can see from the example above, the tasks are arranged in a sequence that leads participants to book a flight and pay with a credit card. Whatever the type of usability test, the structure of the tasks affects how accurate the results are.

Measuring tasks for a usability test

Each usability task can be assessed for effectiveness, efficiency, and satisfaction. In her article Usability Metrics: A Better Usability Approach, Ayat lists these six metrics for measuring a usability task:

- Task success rate

- Time-based efficiency

- Error rate

- Overall relative efficiency

- Post-task satisfaction

- Task level satisfaction

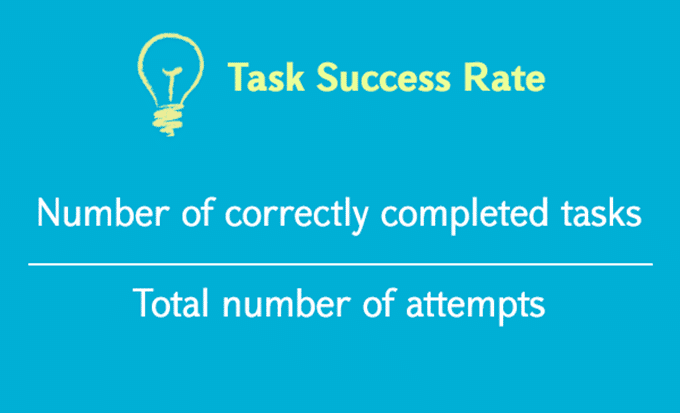

Task success rate

Task success rate, also called completion rate, is one of the most fundamental usability metrics. It is the percentage of tasks that users complete successfully, and it is calculated at the end of the test.

Every researcher hopes for a 100% success rate, since task success is the bottom line of any usability test. The higher the task success rate, the better.

In practice, however, a study based on an analysis of 1,100 tasks found that the average task success rate is 78%. The same study concluded that the task success rate depends on the context in which the task is evaluated.

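The task success rate is calculated using this simple formula:

$$\text{Task success rate} = \frac{\text{Number of tasks completed successfully}}{\text{Total number of tasks undertaken}} \times 100\%$$

Formula source: Every Interaction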
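The calculation is simple enough to sketch in a few lines of Python. This is a minimal illustration, assuming you record one outcome per task attempt as `True` (completed) or `False` (not completed); the function name and data layout are hypothetical, not part of any testing tool.

```python
def task_success_rate(outcomes: list[bool]) -> float:
    """Percentage of attempted tasks that were completed successfully."""
    if not outcomes:
        raise ValueError("no task attempts recorded")
    completed = sum(outcomes)  # True counts as 1, False as 0
    return completed / len(outcomes) * 100


# Hypothetical data: 10 task attempts, 8 of them completed successfully.
attempts = [True, True, False, True, True, True, False, True, True, True]
print(task_success_rate(attempts))
```

Here 8 of 10 attempts succeed, so the rate is 80%.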

The task success rate is easy to understand and calculate, but what if a user only partially completes a task? Do you count that as a success or a failure?

Partial completion makes scoring subjective: what one evaluator considers a success, another may score as a failure. There is no right or wrong rule here.

Jakob Nielsen, co-founder of the Nielsen Norman Group, agrees: how to score partial success depends on the magnitude of the error, and there is usually no firm rule.

To keep scoring as accurate as possible, define for each task, before the test, exactly how success will be scored and what counts as partial versus complete success.
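One way to make such a scoring rule explicit is to write it down as a credit table and apply it consistently. The sketch below assumes a hypothetical three-level rubric (success, partial, failure) and gives partial success half credit; that 0.5 weight is an assumption for illustration, not a rule from the article, so agree on your own weights before the test.

```python
from enum import Enum


class Outcome(Enum):
    SUCCESS = "success"
    PARTIAL = "partial"
    FAILURE = "failure"


# Hypothetical scoring rubric: credit earned by each outcome.
# The 0.5 weight for partial success is an assumption; set it before testing.
CREDIT = {Outcome.SUCCESS: 1.0, Outcome.PARTIAL: 0.5, Outcome.FAILURE: 0.0}


def weighted_success_rate(outcomes: list[Outcome],
                          credit: dict[Outcome, float] = CREDIT) -> float:
    """Success rate (in %) in which partial completions earn partial credit."""
    if not outcomes:
        raise ValueError("no task attempts recorded")
    return sum(credit[o] for o in outcomes) / len(outcomes) * 100


scores = [Outcome.SUCCESS, Outcome.PARTIAL, Outcome.FAILURE, Outcome.SUCCESS]
print(weighted_success_rate(scores))
```

Passing a stricter table, for example one where `Outcome.PARTIAL` earns 0.0, shows how much the final number depends on the rubric, which is exactly why it should be fixed in advance.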

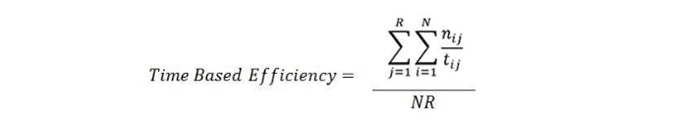

Time-based Efficiency

This metric reflects how quickly participants complete each task successfully, based on the time they spend on it.

Time on task is useful for diagnosing usability problems: the amount of time a user spends on a task tells you whether they are having difficulty interacting with the interface. To work out time on task, use this simple equation:

Time on task = End time - Start time
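If a moderator logs start and end timestamps, the subtraction can be done with Python’s standard `datetime` module. This is a small sketch; the timestamps and the function name are made up for illustration.

```python
from datetime import datetime


def time_on_task(start: datetime, end: datetime) -> float:
    """Time on task in seconds: end time minus start time."""
    if end < start:
        raise ValueError("end time is before start time")
    return (end - start).total_seconds()


# Hypothetical timestamps logged by a moderator.
start = datetime(2024, 5, 1, 10, 15, 0)
end = datetime(2024, 5, 1, 10, 17, 30)
print(time_on_task(start, end))  # 150.0
```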

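Now that you know how to calculate time on task, the more complicated part is working out time-based efficiency. The formula may look intimidating, but it becomes easy to follow once you insert numbers:

$$\text{Time-based efficiency} = \frac{\sum_{j=1}^{R}\sum_{i=1}^{N}\frac{N_{ij}}{T_{ij}}}{NR}$$

The result is expressed in goals per unit of time, for example goals per second.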

Where:

N = Number of tasks

R = Number of users

Nij = The result of task i by user j: Nij = 1 if the user successfully completes the task, and Nij = 0 if not

Tij = The time spent by user j to complete task i. If the task is not successfully completed, then time is measured until the moment the user quits the task.
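Plugging in numbers is easier with a small script. The sketch below is one possible implementation, assuming results are stored as two tables indexed by user (row) and task (column): `n` holds the Nij values and `t` holds the Tij times in seconds. It sums Nij / Tij over all users and tasks and divides by N × R; the data is hypothetical.

```python
def time_based_efficiency(n: list[list[int]], t: list[list[float]]) -> float:
    """Time-based efficiency in goals per second.

    n[j][i] is Nij: 1 if user j completed task i successfully, otherwise 0.
    t[j][i] is Tij: seconds user j spent on task i (until success or quitting).
    """
    R = len(n)     # number of users
    N = len(n[0])  # number of tasks
    if len(t) != R or any(len(row) != N for row in [*n, *t]):
        raise ValueError("n and t must both be R x N tables")
    total = sum(n[j][i] / t[j][i] for j in range(R) for i in range(N))
    return total / (N * R)


# Hypothetical results: 2 users (rows) x 2 tasks (columns).
completed = [[1, 1],
             [1, 0]]          # user 2 gave up on task 2
seconds = [[20.0, 40.0],
           [50.0, 100.0]]
print(time_based_efficiency(completed, seconds))
```

Note that a failed task still contributes its time Tij to the data, but its Nij of 0 removes it from the sum, so abandoned tasks lower the average.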

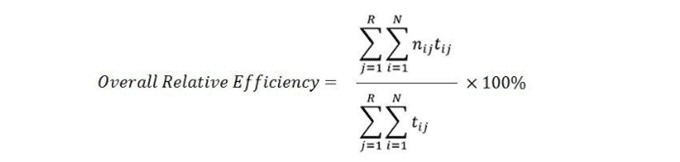

Overall relative efficiency

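This is the ratio of the time spent by users who successfully completed the tasks to the total time spent by all users. It can be calculated using this equation:

$$\text{Overall relative efficiency} = \frac{\sum_{j=1}^{R}\sum_{i=1}^{N} N_{ij}T_{ij}}{\sum_{j=1}^{R}\sum_{i=1}^{N} T_{ij}} \times 100\%$$

Here N, R, Nij, and Tij have the same meaning as in the time-based efficiency formula.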
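As a sketch, overall relative efficiency divides the time spent on successfully completed tasks (the sum of Nij × Tij) by the total time spent on all tasks (the sum of Tij). The table layout, with users as rows and tasks as columns, and the sample numbers are assumptions for illustration.

```python
def overall_relative_efficiency(n: list[list[int]], t: list[list[float]]) -> float:
    """Percentage of total task time spent on tasks that were completed successfully."""
    successful_time = sum(
        n_ji * t_ji
        for n_row, t_row in zip(n, t)
        for n_ji, t_ji in zip(n_row, t_row)
    )
    total_time = sum(sum(t_row) for t_row in t)
    return successful_time / total_time * 100


# Hypothetical results: 2 users (rows) x 2 tasks (columns), times in seconds.
completed = [[1, 1], [1, 0]]
seconds = [[20.0, 40.0], [50.0, 100.0]]
print(overall_relative_efficiency(completed, seconds))
```

With these numbers, 110 of the 210 seconds went into successful tasks (about 52%); the 100 seconds user 2 spent before abandoning task 2 drag the ratio down.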

Error rate

This metric counts the errors participants make while attempting to complete a given task. Counting errors can seem daunting to many researchers, but this metric provides excellent information about how usable your system is.

To measure this metric and get valuable insights from it, Ayat recommends in her article that you write a short description for each task detailing how to score errors and how severe each type of error is. Scored this way, errors show you how simple and intuitive your system is.
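The article does not prescribe a formula for error rate, so the following is only one possible sketch. It assumes you log each observed error with the participant, the task, and a severity on a hypothetical 1 to 3 scale, then reports the average number of errors per attempt and the error count at each severity level for every task. The class, field, and function names are all made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorEvent:
    participant: str
    task: str
    severity: int  # hypothetical scale: 1 = minor, 2 = moderate, 3 = critical


def error_summary(events: list[ErrorEvent], attempts_per_task: dict[str, int]) -> dict[str, dict]:
    """Per task: average number of errors per attempt and error counts by severity."""
    summary = {}
    for task, attempts in attempts_per_task.items():
        if attempts <= 0:
            raise ValueError(f"task {task!r} needs at least one attempt")
        task_events = [e for e in events if e.task == task]
        summary[task] = {
            "errors_per_attempt": len(task_events) / attempts,
            "by_severity": dict(Counter(e.severity for e in task_events)),
        }
    return summary


log = [
    ErrorEvent("P1", "checkout", 1),
    ErrorEvent("P2", "checkout", 3),
    ErrorEvent("P2", "checkout", 1),
    ErrorEvent("P3", "search", 2),
]
print(error_summary(log, {"checkout": 4, "search": 4}))
```

Keeping severity separate from the raw count matters: one critical error in checkout may deserve more attention than several minor slips in search.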

Post-task satisfaction

As soon as participants finish a task (whether they complete it or not), hand them a questionnaire to measure how difficult they found it. This post-task questionnaire should have about 5 questions, and it should be given at the end of every task.
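To compare tasks, you can average each task’s questionnaire answers across participants. This is a minimal sketch, assuming every answer is a rating on a hypothetical 1 to 7 scale where higher means easier; adapt `scale_max` and the data layout to whatever your questionnaire actually uses.

```python
from statistics import mean


def post_task_scores(responses: dict[str, list[list[int]]], scale_max: int = 7) -> dict[str, float]:
    """Mean post-task rating per task.

    responses[task] holds one list of answers per participant; each answer is
    assumed to be on a 1..scale_max rating scale (hypothetical).
    """
    scores = {}
    for task, per_participant in responses.items():
        answers = [a for participant in per_participant for a in participant]
        if any(not 1 <= a <= scale_max for a in answers):
            raise ValueError(f"answer out of range for task {task!r}")
        scores[task] = mean(answers)
    return scores


# Hypothetical answers from 2 participants to a 5-question post-task questionnaire.
responses = {
    "book flight": [[6, 7, 5, 6, 6], [4, 5, 4, 3, 4]],
    "pay": [[2, 3, 2, 2, 1], [3, 3, 4, 2, 3]],
}
print(post_task_scores(responses))
```

A clearly lower average, as for the hypothetical "pay" task here, points you to where to look first in the session recordings.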

Task level satisfaction

At the end of the test session, you can hand every participant a questionnaire to get an overall impression of how satisfied they were with the product you are testing. The type of questions depends on the data you want to collect: open-ended questions, closed-ended questions, or a mix of both.

Types of usability tasks

Usability tasks are either open-ended or closed-ended. Which type to use usually depends on the objectives of your test: once you have defined your test goals, you can decide on the type of task.

That said, Khalid Saleh, the CEO of Invesp, suggests that an effective usability test finds a “middle ground” by using both types of tasks in a single test:

Whether it’s customer interviews, polls, usability tests, surveys, focus groups or any other qualitative research method we conduct, we often use open-ended and closed-ended questions in unison at Invesp. This always gives the best value and a natural rhythm to the flow of our research.

Open-ended Tasks

As the name suggests, open-ended tasks are flexible and come with minimal explanation of how to complete them.

They allow a practically unlimited range of responses, so participants can give whatever answers they consider relevant. According to Susan Farrell from Nielsen Norman Group, open-ended tasks invite participants to answer “with sentences, lists, and stories, giving deeper and new insights.”

Examples

Scenario: You are a frequent moviegoer who wants to receive text message updates about movie premieres so that you don’t have to search for them every day.

Goal: the researcher may want to see how satisfied users are while using the product under test. In this context, here are some open-ended tasks the researcher might ask users to perform:

- Please spend six minutes interacting with the website as you normally would.

- Use this mobile application for six minutes, and make sure you get movie premiere notifications sent to your phone.

Or let’s say you have just designed an e-commerce website and your goal is to find out whether users can get through the site’s checkout flow without being distracted by certain elements. As an open-ended task, you could use this:

- You have been given a $90 gift card that expires in the next 10 minutes. Use it before it expires.

As the examples above show, these tasks are ongoing and have no clear end point, so individual participants will approach and complete them differently. In essence, what makes a task open-ended is that the same outcome can be achieved in several different ways.

When to use open-ended tasks

Identifying Usability Bottlenecks: If you want to find elements that confuse users as they interact with your site, giving them license to roam freely will help you uncover issues you were unaware of.

Discovering New Areas of Interest: Encouraging unanticipated answers through open-ended tasks can be valuable. Users may give creative answers, and in the process you can discover something unique and unexpected.

Deciding on products to prioritize: Usability tests can do more than uncover a website’s or app’s usability issues. With open-ended tasks, you can use a usability test strategically to find out what your users value most.

Closed-ended tasks

Also referred to as a specific task, this kind of task is goal-oriented and based on the idea that there is one correct answer. Participants are given precise details: the exact features to focus on and how they are supposed to interact with the interface.

With specific tasks, your test focuses on exactly the features you want to research. They are wonderfully effective at making the session easy for participants, even though they can bias users toward preconceived answers, Susan says.

Examples

Scenario 1: You need to transfer $2,000 from your bank account to a friend’s account, but your schedule for the day is tight. Instead of going to the bank, you decide to make the transfer on your smartphone. Use XYZ as your account number and ABC as your credit card details.

Goal: in this case, the researcher may want to find out how efficiently users can make bank transfers on their phones.

Tasks

- Click here to open the website.

- Enter your name and use your account number as your password.

- Click on the ‘make a transfer’ section.

- Enter the amount of money you intend to send.

- Enter the details of the person you intend to send to.

- Press the preview button then confirm.

Or imagine you have just finished working on a new website and want to validate the first impression it gives, so you conduct a usability test to see what participants think of your web pages. Here’s a closed-ended task you might use:

Task: Use this link to visit the website, view each and every web page and click on the CTA button on the Home Page.

In the second example, the researcher specifically wants to observe what kind of experience users have on the site. Notice how the task instructs participants on how to interact with the site.

When to use closed-ended tasks?

Testing Specific Elements: Suppose you have just added a live chat feature to your website and want to test its usability. In this case, you can give participants specific instructions on how to use the live chat feature and ask them about their experience after the test.

Complicated Products: Not all web products are easy to use at first glance; some websites are unusual, and some are simply non-traditional. In such cases, closed-ended tasks guide participants on how to navigate the product.

Optimizing Pages: If Google Analytics shows that your website has a high bounce rate, you can use closed-ended tasks to watch participants go through the site and afterwards ask them about any challenges they faced. This helps you find conversion problems and understand why visitors are bouncing.

What makes a good usability task?

Defining the goals of the test and moderating it are the easy parts of a usability test. The challenging parts are recruiting participants and writing effective tasks that mimic a real customer journey as closely as possible. This is what Elena Luchita says about usability tasks:

You need to make sure your test is well-structured, and the tasks you write are readable and easy to understand. It’s simple to collect skewed data that validates your hypotheses yet isn’t representative of your users.

As most researchers can testify, it is easy to recruit the wrong participants and to make mistakes when writing usability tasks; there is a lot to consider. The Nielsen Norman Group considers writing good tasks for a usability test as much an art as a science.

So, regardless of the type of usability test, here are some of the universal characteristics that make a good usability task:

- Use actionable verbs

- Use the user’s language, not the product’s

- Simple and clear

- The task has to be realistic

- Give no hints

- Be short and precise

Use Actionable Verbs

Whether the task is open-ended or specific, it’s best to get users to actually perform it rather than asking how they would do it. One way of doing this is to include action verbs in your tasks.

Here is a list of action verbs that can be used in a usability test:

- Download

- Buy

- Subscribe

- Find

- Click

- Complete

- Sign up

- Register

- Log in

- Invite

- Create

If there are actionable tasks in user testing, there are also non-actionable ones. The difference between the two is that actionable tasks prompt users to do something, while non-actionable tasks encourage users to answer in words.

To show you what I mean, let’s say you are conducting a usability test to see if users can find a verification code in a link that is in your email marketing message.

An actionable task would read “Open this email and find the verification code,” whereas a non-actionable task would read “How would you find the verification code in this email?”

Watching participants work out how to complete a task through their actions lets you observe their reactions and gives accurate insight into how users operate your product. Besides, self-reported data is likely to be inaccurate, so it’s better to watch what users do than to rely on what they say.

Remember that usability testing is premised on the idea of observing what participants do, not what they say. Whenever you find users answering your tasks in words, your tasks are not actionable.
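If you write many tasks, a rough automated check can catch non-actionable wording before the test. The sketch below is a heuristic of my own, not an established tool: it flags tasks phrased as questions (for example, starting with “How would you”) and tasks that contain none of the action verbs listed above. The verb set and patterns are assumptions you would tune, and a human review is still needed.

```python
import re

# Action verbs from the list above, lower-cased ("sign up" -> "sign", "log in" -> "log").
ACTION_VERBS = {
    "download", "buy", "subscribe", "find", "click", "complete",
    "sign", "register", "log", "invite", "create",
}

# Phrasings that usually invite a verbal answer instead of an action (assumed heuristics).
NON_ACTIONABLE_PATTERNS = [
    re.compile(r"^\s*how (would|do|could) you\b", re.IGNORECASE),
    re.compile(r"^\s*what (would|do) you\b", re.IGNORECASE),
]


def review_task(task: str) -> list[str]:
    """Return warnings about a task's wording; an empty list means nothing was flagged."""
    warnings = []
    if any(p.search(task) for p in NON_ACTIONABLE_PATTERNS) or task.rstrip().endswith("?"):
        warnings.append("phrased as a question: participants may answer in words")
    words = re.findall(r"[a-z]+", task.lower())
    if not any(w in ACTION_VERBS for w in words):
        warnings.append("no action verb from the list found")
    return warnings


print(review_task("Open this email and find the verification code."))
print(review_task("How would you find the verification code in this email?"))
```

The first task passes; the second is flagged as a question even though it contains “find”, which matches the distinction drawn above.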

Use the user’s language, not the product’s

When I was in my final year at college, I remember trying to read a friend’s medical textbook; I couldn’t get past the first page. I was frustrated by all the jargon cluttering the book. Its diction was meant for medical students, and as a journalism student, I struggled to grasp the intended meaning of some paragraphs.

Here is what Tim Rotolo, a UX Architect at TryMyUI, says about language when writing a usability task:

The main thing to remember is that writing good usability testing tasks comes down to communication. Your tasks have to make sense to the average user. Common language that is accessible and easy to digest is superior to very technical, stiff writing. Don’t talk like a researcher; be clear and straightforward.

So, when it comes to writing usability tasks, you never want your participants to wonder “what does this mean?” It is a fatal mistake to assume that participants will understand your industry terms. It’s best to write tasks that speak to your users and not at them. Any misunderstanding could lead to fabricated feedback.

To show you what I mean, let’s say you intend to design a new interface for sharing articles and you conduct a usability test to find out which icon will be the easiest for your users to understand. A task with a user-centric language will look like this:

Have a look at the options below. The icons allow you to share the entire article with people. Choose the option that seems like the most intuitive icon for that action.

Needless to say, the wording of your task will affect the test outcome, either negatively or positively. When writing tasks, use words that resonate with your participants. As the saying goes, “focus on the end user, and everything else will follow…”

Simple and clear

Forget valuable feedback if your tasks are not simple and clear to participants: the outcome of your test may carry no weight. As you design your usability tasks, treat clarity as essential.

Be precise in every detail of your task. However much you want to observe how participants use your product, your tasks must be worded so that they can understand them.

A good usability task has to be simple to understand but not too simplistic. Researchers have to strike a balance between the two. Participants have to understand what they are supposed to do, but the task shouldn’t lead them to the answer straight away.

Don’t leave your participants wondering what the assignment is about. If possible, make sure they understand the task at hand before they do anything.

The task has to be realistic

Making tasks realistic is often overlooked when writing tasks for a usability test, and to be honest, this is pretty ridiculous. For insights to be accurate, the environment has to be as natural as possible, which also means the tasks have to mimic a real-life scenario.

For instance, if you run a test to find out how long it takes your users to find a product on your e-commerce site, make sure that the participants you recruit actually buy products from online stores.

Asking participants to do something they don’t usually do can lead them to fabricate feedback. Your best bet for reliable feedback is to give participants a sense of being in charge and owning the task.

Give no hints

Why conduct a usability test if you give clues on how to use the product being tested? Isn’t it better to watch participants use the product however they see fit? Giving clues or asking leading questions defeats the main purpose of the study and pushes users into doing things the way you presumed.

For example, imagine you conduct a test to find out whether users notice important elements on your website.

In such a scenario, if your task tells your participants which elements to focus on, then it’s as good as controlling them.

Poor task: Go to the website, log in and subscribe to the weekly newsletter.

Good task: Identify information that you consider to be useful on the site.

Although giving clues may rob us of the chance to understand our users’ thought process, it is sometimes hard to avoid the standard words used in the interface. To avoid confusing participants, it is occasionally acceptable to bend the rules and use the interface’s own wording.

Be short and precise

Jakob Nielsen’s seminal web usability study of 1997 concluded that 79% of test users always scan the pages and only 16% read word-by-word. This is a reality you should work with, instead of fighting it.

How then do you work with it?

Make your tasks brief and precise, and minimize the time participants need to read and understand them. Lengthy tasks not only take too long to read and understand, they can also inflate the overall time users take to complete the task.

It’s best to give participants the necessary details ONLY; anything outside the context of what you want to test is unnecessary and irrelevant. Strike a balance between keeping the task short and keeping it detailed.

Okay, here is what I mean: “The year is 2019, Samsung has just released the Samsung Galaxy S10, and you are interested in it. Log in to the website, select the phone and add it to the cart.”

5 Steps involved in designing usability tasks

By now you probably know the relevance of tasks in a usability test. So to ensure that you know how to write effective tasks, let’s take a closer look at the steps involved in designing them.

Step 1: Align your tasks to your goals

By the time you design usability tasks, we assume you already know which area of your system you want to test and what goals you are trying to accomplish. The essence of any usability test (whether qualitative or quantitative) is turning your goals into usability tasks.

For instance, if 60% of your users exit on the checkout page of your e-commerce website, your goal for a usability test may be to find out why they leave on that page. Your tasks should then lead participants through the checkout page.

If your tasks are off-track, everything about your usability test can go wrong without you even realizing it.

Step 2: The scenario has to be relatable

One of the reasons why researchers make use of scenarios in user testing is so that the participants are in the right state of mind before they perform any given task.

Presenting a scenario that participants do not relate to can confuse them and lead to misleading results. For your users to genuinely engage and interact with your interface, your scenario has to be something practical in their own world.

You can tell the task scenario is unrealistic if users seem confused or ask for any kind of assistance on how to handle the task required of them.

To make sure that your task scenarios are relatable, the participants you recruit should be familiar with the elements you intend to test. For instance, if you are testing an e-commerce website, you can make your task scenario relatable by recruiting participants who are well-versed in online shopping.

Step 3: Decide on the format of your tasks

Aligning your tasks to your goals is vital, but selecting the right type of task is just as crucial. Each usability test has its own subject matter, so no single task format fits every test.

As mentioned earlier, the two formats of usability tasks are open-ended and specific (closed-ended). You need to decide which of the two to use.

Step 4: Organize the tasks in order

One of the things you want to avoid during a usability test is confusing your participants. As in many other fields, changing the sequence changes the outcome: in usability testing, there is a high chance of distorted results if your tasks are not presented in a logical order.

Specific (closed-ended) tasks are usually presented in order, with participants moving from one step to the next. With open-ended tasks, however, it may not be necessary to present the tasks in a fixed order.

Step 5: Evaluate the tasks

One reason even expert researchers notice mistakes midway through a test is that they neglect to verify their tasks beforehand.

Evaluating your tasks is the final step that must be taken before the process of designing usability tasks is complete. Evaluating your tasks will help you determine whether or not your tasks adhere to your goals.

Be open-minded during this stage, as there may be changes you can make to the tasks to improve the effectiveness of your usability test.

That’s a wrap

Your tasks can make or break your test. It takes only one wrong task to poison a well-planned usability test, so be careful when designing tasks to keep bias from creeping in.

Everyone makes mistakes, which is why it is highly recommended to evaluate your tasks before the actual user testing session.

If your task scenarios resonate with your participants, then you have higher chances of obtaining valuable insights.